This article describes how fail safety works in Exasol on-premises installations.

If the hardware component or server where a cluster node is running fails, Exasol detects that a node is no longer available and triggers an automatic failover procedure.

The primary objective for the failover mechanism is data integrity. The failure of a hardware component does not cause data loss or data corruption. The hard drives of the cluster nodes are configured in RAID 1 pairs to compensate single disk failures without any interruptions. If the volumes are configured with redundancy level 2 (best practice and recommended by Exasol), each cluster node will replicate data to a neighbor node.

When Exasol is installed on physical hardware, the hot standby failover mechanism is used. With hot standby you have one or more reserve nodes standing by for the active nodes in your system. If a node fails, a reserve node immediately takes over for the failed node. The cluster operating system will automatically restart the necessary services on the new node provided that the corresponding resources (main memory, number of nodes, etc.) exist.

For more information about how to define reserve nodes, see Add Reserve Nodes.

Hot Standby

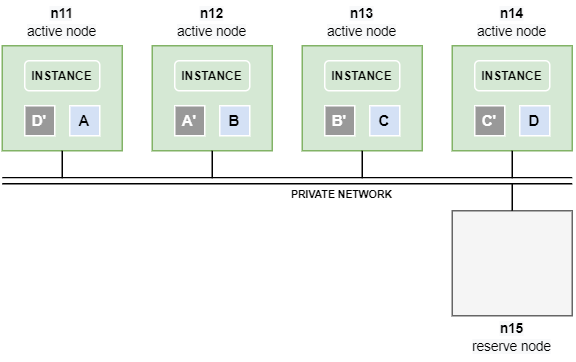

Example: 4+1 cluster with redundancy level 2

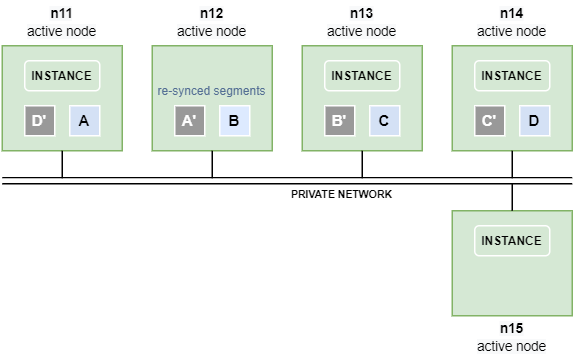

The following example shows an Exasol cluster with 4 active data nodes and one reserve node (4+1).

The volumes are configured with redundancy level 2, which means that each node contains a mirror of the data segments that are operated on by a neighbor node. For example, if node n11 modifies A, the mirror A‘ on the neighbor node n12 is synchronized over the private network.

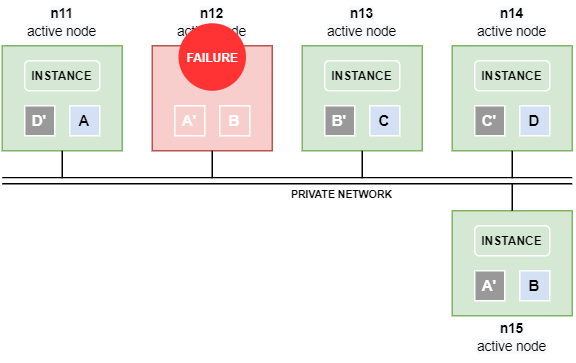

What happens on node failure

A node failure triggers the following automatic sequence of actions by

- The node failure is detected.

- All affected databases on the cluster are stopped.

- The reserve node is activated and the failed node becomes a reserve node for the affected databases.

- All databases are restarted.

- Database connectivity is restored.

What happens next depends on whether the failed node comes back online within a specific timeout period (transient node failure) or if it does not come back (persistent node failure). In case of a transient node failure, a complete restore of data segments from mirrors to the new active node will not be required.

Persistent node failure

If the failed node n12 does not become available again before the timeout threshold is reached (the default value is 10 minutes), the segments A‘ and B that resided on the failed node are automatically copied to n15 – the former reserve node – using their respective mirrors A on n11 and B‘ on n13.

Data is automatically copied between the nodes over the private network. If the private network has been separated into a database network and a storage network, data is copied over the storage network.

Copying data between nodes is time consuming and can put a significant load on the private network.

In case of a persistent node failure we recommend that you add a new reserve node to replace the failed node.

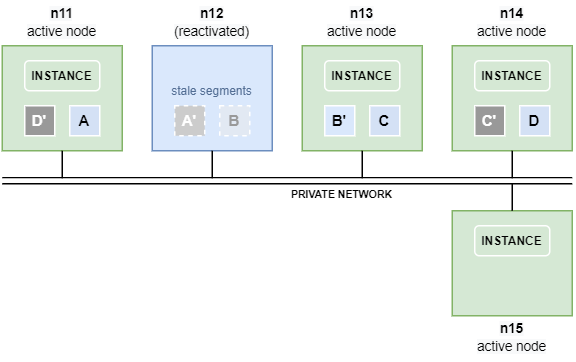

Transient node failure

If the failed node n12 comes back before the timeout threshold is reached a complete restore of the mirrors towards node n15 is not necessary, and segments will therefore not be copied between the nodes. The segments A’ and B on node n12 are however now stale, since their mirrors on nodes n11 and n13 have been operated on in the meantime. The segments therefore have to be re-synchronized before they can be used again.

Fast mirror re-sync

As soon as node n12 is back online, the stale segments are automatically re-synced by the cluster operating system, applying the changes on A and B‘ that were done on their respective mirrors while n12 was offline. This activity is much faster and less load-intensive than a complete restore of segments towards node n15.

The instance on node n15 now works on the segments residing on node n12, until the database is restarted.

Move data between nodes

Since restarting the database requires additional downtime, an alternative method that will not cause any downtime is to move data between the nodes using the ConfD job st_volume_move_node. For example:

confd_client st_volume_move_node vname: DATA_VOLUME_1 src_nodes: '[12]' dst_nodes: '[15]'In this case the segments residing on node n12 are moved to node n15.

Moving data is done over the private network. If the private network has been separated into a database network and a storage network, copying is done over the storage network.

Moving data between nodes is time consuming and can put a significant load on the private network.

Verify where the database node payload resides

Storage Failure

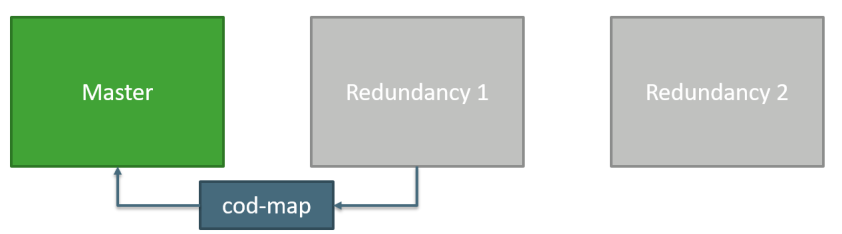

You can configure storage with redundancy as a fail-safety mechanism. The following example describes what happens if the storage fails when using a redundancy level 3 setup.

Initial situation

In this example there is one master segment handling all requests, and two redundancy segments (redundancy level 3).

Master segment fails

If the master segment fails, the following happens:

- The first redundancy segment becomes the deputy.

- Operation is redirected.

- Application continues to work.

Master segment is back online

When the master segment comes back online, the following happens:

- The initial master segment becomes the master segment again.

- Copy on demand on read operations are done.

- A background restore is done.