Cluster Nodes

Exasol runs on a cluster of powerful servers. Each server, or node, is connected to the other nodes through at least one private, cluster-internal network, and is also connected to at least one public network.

Hardware

The building blocks of an Exasol Cluster are commodity x86 Intel or AMD servers. SAS hard drives and Ethernet cards are sufficient. There is no need for an additional storage layer like a SAN.

For additional information on hardware and the minimum requirements for server hardware, refer to the System Requirements section.

Disk Layout

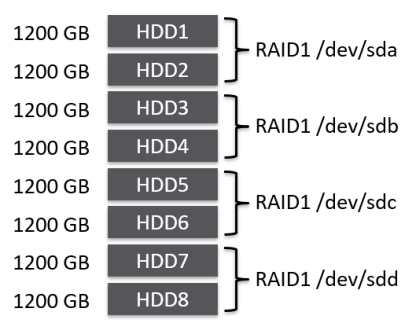

As a best practice, the hard drives of Exasol Cluster nodes are configured as RAID 1 pairs. Each cluster node holds four different areas on disk:

OS with 50 GiB size containing CentOS Linux, EXAClusterOS and the Exasol database executables

Swap with 16 GiB size

Data with 50 GiB size containing Logfiles, Coredumps and BucketFS

Storage consuming the remaining capacity of the hard drives for the Data Volumes and Archive Volumes

The first three areas can be stored on dedicated disks, in which case these disks are also configured as RAID 1 pairs and are usually smaller than the disks that contain the volumes. More commonly, servers have only one type of disk. These disks are configured as hardware RAID 1 pairs. On top of that, software RAID partitions are striped across all disks to hold the OS, Swap and Data areas.

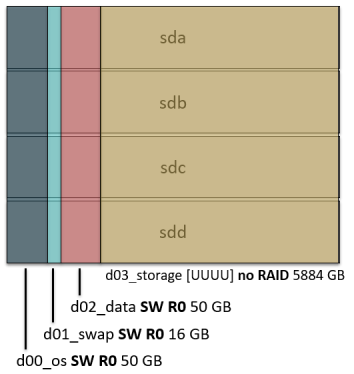

Sample disk layout:

In this example, one cluster node has 8 physical disks. These 8 disks are configured as hardware RAID 1 pairs, resulting in 4 usable “disks” (sda, sdb, sdc, sdd) that can tolerate the failure of any one physical hard drive.

On top of that, the three areas OS, Swap and Data are striped as software RAID 0 across all 4 “disks”. The Storage area does not use software RAID.
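The arithmetic behind this sample layout can be sketched in a few lines of Python. This is only an illustration, not an Exasol tool: the function name `disk_layout` and the 1000 GiB disk size are hypothetical, and the sketch assumes that the stated sizes (50 GiB OS, 16 GiB Swap, 50 GiB Data) are the totals of each striped area, so that each RAID 1 device contributes an equal share.

```python
# Sizes of the three striped areas in GiB, as listed in the text above.
STRIPED_AREAS_GIB = {"OS": 50, "Swap": 16, "Data": 50}


def disk_layout(physical_disks: int, disk_size_gib: float) -> dict:
    """Estimate the per-device layout of one cluster node.

    Assumptions (not taken from Exasol documentation):
    - all physical disks have the same size,
    - the area sizes are totals, split evenly across the RAID 0 stripe.
    """
    if physical_disks < 2 or physical_disks % 2 != 0:
        raise ValueError("RAID 1 pairs need an even number of disks (>= 2)")

    # Each hardware RAID 1 pair appears as one usable device (sda, sdb, ...).
    raid1_devices = physical_disks // 2

    # Software RAID 0: every device holds an equal slice of each area.
    per_device = {
        name: size / raid1_devices for name, size in STRIPED_AREAS_GIB.items()
    }

    # Whatever is left on each device goes to the Storage area.
    storage_per_device = disk_size_gib - sum(per_device.values())
    if storage_per_device <= 0:
        raise ValueError("disks are too small for the OS, Swap and Data areas")

    return {
        "raid1_devices": raid1_devices,
        "per_device_gib": per_device,
        "storage_per_device_gib": storage_per_device,
        "storage_total_gib": storage_per_device * raid1_devices,
    }


if __name__ == "__main__":
    # 8 physical disks as in the example; 1000 GiB per disk is a made-up value.
    print(disk_layout(physical_disks=8, disk_size_gib=1000))
```

Under these assumptions, each of the 4 devices holds 12.5 GiB of OS, 4 GiB of Swap and 12.5 GiB of Data, and the remainder of every device is available to the Storage area.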

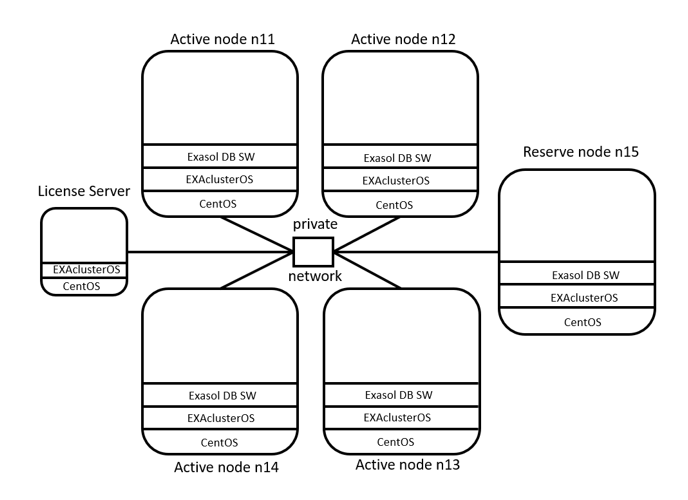

Exasol 4+1 Cluster: Software Layers

This popular multi-node cluster serves as an example to illustrate the concepts explained. It is called a 4+1 cluster because it has 4 Active nodes and 1 Reserve node. All 5 nodes have the same layers of software available. Upon cluster installation, the License Server copies these layers as tarballs across the private network to the other nodes. The License Server is the only node in the cluster that boots from disk. Upon cluster start-up, it provides the required software layers to the other cluster nodes.