Purpose

Use this command to transfer data from Exasol into external files or database systems.

Prerequisites

- In Exasol, you need to have the system privilege EXPORT, plus the corresponding privileges to read the table contents.

- In the target system, you need to have the privileges to insert rows or writing files. You also need the rights to replace or truncate the target.

- When using a connection, you need to either have the system privilege USE ANY CONNECTION or the connection is granted by the GRANT statement to the user or to one of the user roles. For more information, refer to the CREATE CONNECTION section in Access Control Using SQL(DCL).

Syntax

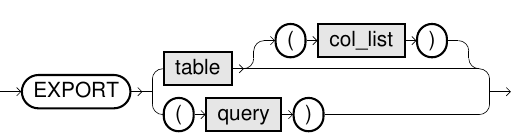

export::=

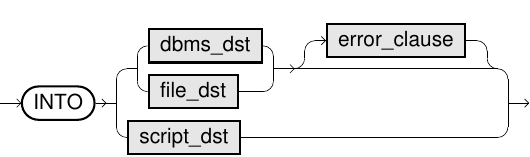

dbms_dst:=

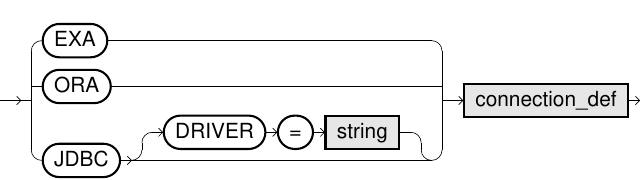

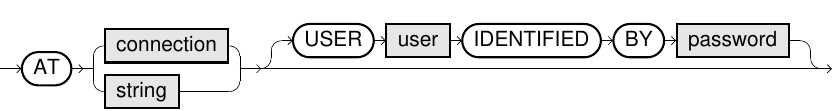

connection_def:=

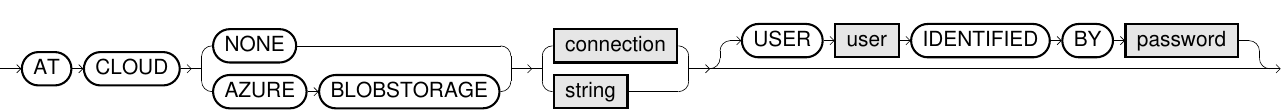

cloud_connection_def::=

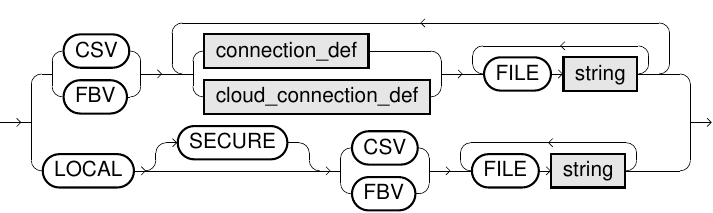

file_dst:=

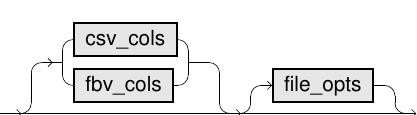

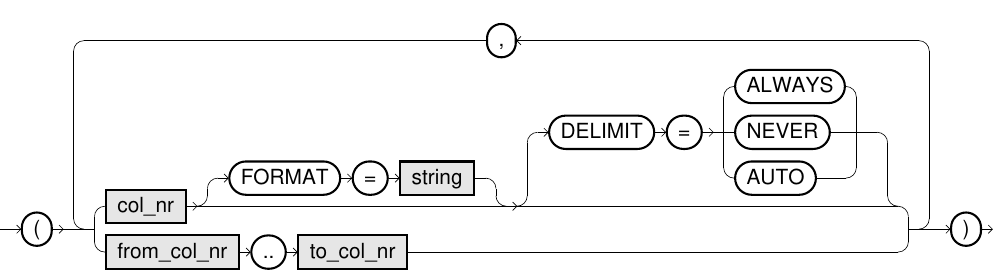

csv_cols:=

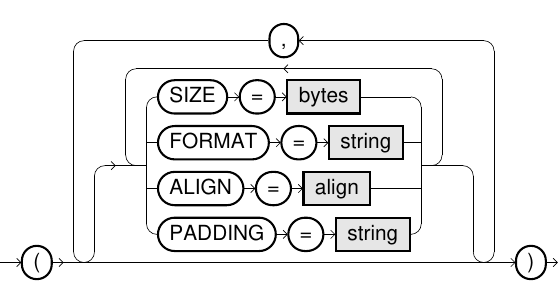

fbv_cols:=

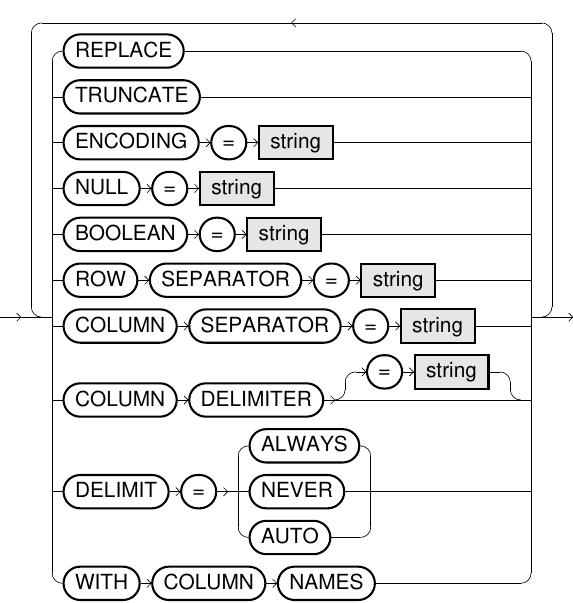

file_opts:=

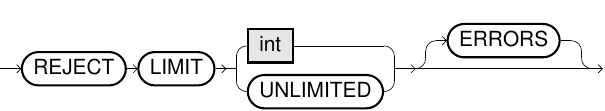

error_clause:=

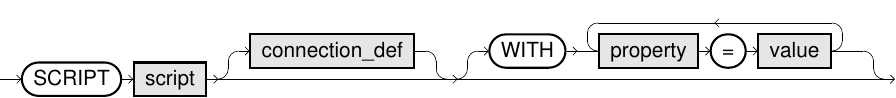

script_dst:=

Usage Notes

- The progress of the data transfer can be viewed using the system table EXA_USER_SESSIONS (column ACTIVITY) through a second connection screen.

- For additional information about ETL processes, refer to the ETL in Exasol section.

- If no other option is specified, the data is appended to the target.

- Only statements or views with ORDER BY clause on the top level are exported in sorted order (only in case of files).

- Certificates are not verified for encrypted connections.

- In case of URLs starting with “ftps://”, the implicit encryption is used.

- In case of URLs starting with “ftp://”, Exasol encrypts the user name and password (explicit encryption), if the server supports this. If the server requires encryption, then the whole data transfer is done encrypted.

- For FTP and FTPS servers, only passive mode is supported.

- For HTTP and HTTPS servers only basic authentication is supported.

-

For HTTP and HTTPS connections, HTTP query parameters can be specified by appending them to the file name.

Example:

FILE 'file.csv?op=OPEN&user.name=user' - 'LF'(Default): Corresponds to the ASCII character 0x0a (Unix)

- 'CR': Corresponds to the ASCII character 0x0d (Macintosh)

- 'CRLF': Corresponds to the ASCII characters 0x0d and 0x0a (Windows)

- 'NONE': No line break. This is only allowed in FBV files.

The following table provides you with an overview of the different elements and their meaning in the EXPORT command:

| Element | Meaning | ||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| data_src |

The source data can either be a table (as an identifier like MY_SCHEMA.MY_TABLE) or a query (like 'SELECT * FROM DUAL'). For tables, you can also specify the columns to be used. |

||||||||||||||||||||||||||||

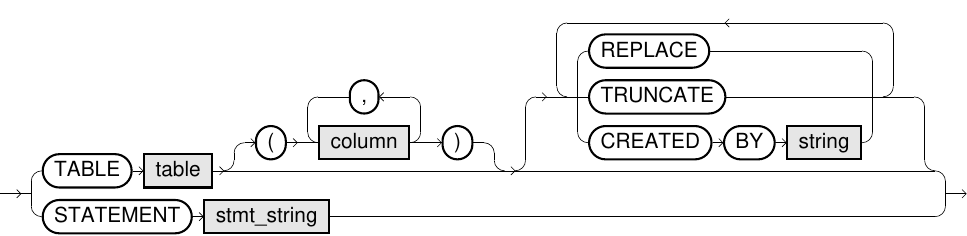

| dbms_dst |

Defines the database destination whose connection data is specified in the connection_def. You can choose among an Exasol connection (EXA), a native connection to an Oracle database (ORA) or a JDBC connection to any database (JDBC). Some JDBC drivers are included by default (visible in EXAoperation) and can be used within the connection string. You can additionally configure JDBC drivers in EXAoperation and choose them by the DRIVER option if its prefix is ambiguous. Only the pre-installed JDBC drivers (marked gray in EXAoperation) are tested and officially supported. If you need help with other drivers, contact support. For the target, you can define either a table or a prepared statement (for example, an INSERT statement or a procedure call). In the latter case, the data is passed as input data to the prepared statement. Please note that you have to use schema-qualified table names. Within the statement, use question marks as placeholders for the parameter values, for example: INSERT INTO t VALUES (?, ?, ?). When using the TABLE syntax (as opposed to STATEMENT), the table name identifier is treated similarly to Exasol tables. If your remote systems expect case-sensitive syntax, you must use quote marks to delimit the table names. Further options for the target:

|

||||||||||||||||||||||||||||

| file_dst |

Specifies the data file target. Remote Files: FTP, FTPS, HTTP, and HTTPS servers are supported if connection data is defined through the connection_def. The following are some of the considerations while using remote data file source: Local Files: You can also export to local files on your client system. For exporting local files, the JDBC driver opens an internal connection to the cluster and provides an HTTP or HTTPS (SECURE-Option) server. When specifying the SECURE option, the data is transferred encrypted, but also with slower performance. This functionality is only supported for EXAplus and the JDBC driver. It can not be used in prepared statements or within database scripts. The target file can either be CSV or FBV files and will then comply respectively to the format specifications

in the CSV Data Format or the Fixblock Data format (FBV). File

names may only consist of ASCII characters. A BOM Compressed files are recognized by their file extension. Supported extensions are .zip, .gz (gzip) and .bz2 (bzip2). When specifying multiple files, the actual data distribution depends on several factors. It is also possible that some files are completely empty. When the files are local, they are written into one file. Cloud Storage Service: You can export files to a cloud storage service. For more details, see cloud_connection_def. |

||||||||||||||||||||||||||||

| script_dst |

Specifies the UDF script to be used for a user-defined export. Optionally, you can define a connection or properties which will be forwarded to the script. The specified script will generate a SELECT statement internally that will be executed to do the actual export. The script has to implement a special callback function that receives the export specification (for example, parameters and connection information) and returns a SELECT statement. For more information, refer to User-defined EXPORT using UDFs section. connection_def Optional connection definition for being able to encapsulate connection information such as password. For more information, refer to the connection_def. WITH parameter=value... Optional parameters to be passed to the script. Each script can define the mandatory and optional parameters it supports. Parameters are simple key-value pairs, with the value being a string. For example: ... WITH PARAM_1='val1' PARAM_2 = 'val2'; |

||||||||||||||||||||||||||||

| connection_def |

Defines the connection to the external database or file server. It can be specified within a connection string (for example, 'ftp://192.168.1.1/') and the corresponding login information. For regular ETL jobs, you can also make use of connections where the connection data like username and password can easily be encapsulated. For more information, refer to the CREATE CONNECTION statement in Access control using SQL (DCL) section. The declaration of user and password within the EXPORT command are optional. If they are omitted, the data of the connection string or the connection object are used. For JDBC connections, it is possible to use Kerberos authentication by specifying specific data in the IDENTIFIED BY field. This data consists of a key which indicates that Kerberos authentication should be used (ExaAuthType=Kerberos), a base64 encoded configuration file and a base64 encoded keytab file containing the credentials for the principal. The syntax looks like the following: |

||||||||||||||||||||||||||||

| cloud_connection_def |

Defines a connection to a cloud storage service. This can be specified within a connection string and the corresponding authentication information. The connection string format may vary by cloud service. Example:

|

||||||||||||||||||||||||||||

| csv_cols |

Defines how the column data is written to the CSV file. For more information, refer to the CSV DATA Format section.

Example In this example, four columns are exported into the CSV file, and the column uses the data format specified for the CSV file. (1..3,4 FORMAT='DD-MM-YYYY') |

||||||||||||||||||||||||||||

| fbv_cols |

Defines how the column data is written to the FBV file. For more information, see Fixblock Data Format. The following elements can be specified in an FBV file:

Example In this example, four columns are exported into the FBV file. The first column is aligned to the right, and filled with 8 bytes with + characters, the fourth column has the specified data format. |

||||||||||||||||||||||||||||

| file_opts |

|

||||||||||||||||||||||||||||

| error_clause |

This clause defines how many invalid rows of the source are allowed. For example, in the case of REJECT LIMIT 5, the statement will work fine if there are less than or equal to five invalid rows and would throw an exception after the sixth row. REJECT LIMIT 0 has the same behavior when you have omitted the error clause completely. |

Examples

EXPORT tab1 INTO CSV

AT 'ftp://192.168.1.1/' USER 'agent_007' IDENTIFIED BY 'secret'

FILE 'tab1.csv'

COLUMN SEPARATOR = ';'

ENCODING = 'Latin1'

WITH COLUMN NAMES;

CREATE CONNECTION my_connection

TO 'ftp://192.168.1.1/' USER 'agent_007' IDENTIFIED BY 'secret';EXPORT (SELECT * FROM T WHERE id=3295) INTO FBV

AT my_connection

FILE 't1.fbv' FILE 't2.fbv'

REPLACE;EXPORT (SELECT * FROM my_view) INTO EXA

AT '192.168.6.11..14:8563'

USER 'my_user' IDENTIFIED BY 'my_secret'

TABLE my_schema.my_table

CREATED BY 'CREATE TABLE my_table(order_id INT, price DEC(18,2))';EXPORT tab1 INTO JDBC DRIVER='MSSQL'

AT 'jdbc:sqlserver://dbserver;databaseName=testdb'

USER 'agent_007' IDENTIFIED BY 'secret'

TABLE my_schema.tab1;EXPORT tab1 INTO CSV

AT 'http://HadoopNode:50070/webhdfs/v1/tmp'

FILE 'file.csv?op=CREATE&user.name=user';EXPORT tab1 INTO SCRIPT etl.export_hcat_table

WITH HCAT_DB = 'default'

HCAT_TABLE = 'my_hcat_table'

HCAT_ADDRESS = 'hcatalog-server:50111'

HDFS_USER = 'hdfs';Export data to an Amazon S3 bucket:

EXPORT tab1 INTO CSV

AT 'https://testbucket.s3.amazonaws.com'

USER '<AccessKeyID>' IDENTIFIED BY '<SecretAccessKey>'

FILE 'file.csv';Export data to an Amazon S3 bucket with a fully qualified bucket URL:

EXPORT tab1 INTO CSV

AT 'https://testbucket.s3-<region>.amazonaws.com'

USER '<AccessKeyID>' IDENTIFIED BY '<SecretAccessKey>'

FILE 'file.csv';Export data to Azure Blob Storage:

Encryption for Exporting File to AWS

The EXPORT statement supports server-side encryption with Amazon S3-Managed Keys (SSE-S3) and with Customer Master Keys (CMKs) Stored in AWS Key Management Service (SSE-KMS). To know about the server side encryption options available with AWS, see Protecting Data Using Server-Side Encryption.

Exasol doesn't support Server-Side Encryption with Customer-Provided Keys (SSE-C).

To enable the encryption, you need to add the following parameters in IDENTIFIED BY field of your statement:

| Parameter | Required | Description |

|---|---|---|

| secret-key |

The parameter is not required for temporary EC2 credentials. It is required for password authentication. |

Secret access key |

| sse_type |

It is required only for SSE-S3. |

aws:kms for SSE-KMS, AES256 for SSE-S3 |

| kms_key_id | It is required only for SSE-KMS. | KMS Key ID (ARN) to be used for encryption. |

| enc_context | The parameter is not required. | JSON-formatted string key-value pairs |

Examples

SSE-S3 encryption, user/password authentication:

EXPORT test_table INTO CSV AT 'https://testbucket.s3.amazonaws.com'

USER '<key-ID>' IDENTIFIED BY '<secret-key>;sse_type=AES256'

FILE 'testpath/test.csv';SSE-KMS encryption with encryption context, temporary credentials for EC2 role authentication: